Brand Stories

When Trump Turns Artificial Intelligence into a Political Weapon

Tehran – BORNA – At the core of the Trump administration’s AI Action Plan is a clear attempt to centralize power at the federal level and strip states of their authority over AI regulation. The initial version of a new tax bill included a provision that would have banned state-level AI legislation for 10 years. Although the Senate removed this provision by a decisive 99-to-1 vote, Trump continues to pursue the same goal through indirect means.

Under this plan, only states that do not have “heavy” regulations on AI would be eligible for AI-related funding. However, the concept of “AI-related funding” is so vague that, according to experts, any discretionary funding, from broadband financing to support for schools and infrastructure, could fall under this definition.

Grace Gaddy, a policy analyst, warned that this ambiguity is deliberate and designed to put states in a difficult position.

Will the FCC Become the Regulator of AI?

A more concerning part of the plan is the President’s call for the Federal Communications Commission (FCC) to become involved in regulating AI. According to the plan, the FCC must examine whether state regulations pose a barrier to the agency’s duties under the Communications Act of 1934. However, the FCC has no prior experience regulating algorithms, websites, or social networks, and it is not even considered a privacy authority.

Cody Vancek, senior policy advisor at the American Civil Liberties Union (ACLU), stated, “The idea that the FCC would have oversight over AI is nothing but a distortion of the Communications Act. This agency is not a comprehensive technology regulator, and such authority has not been defined for it.”

One of the new executive orders Trump signed in line with this plan, titled “Preventing Woke AI in the Federal Government,” prohibits government agencies from using AI systems with “ideological bias.” The order stipulates that large language models must be “neutral, nonpartisan,” and “truth-seeking,” and must not reproduce values such as diversity, equity, and inclusion (DEI).

Indirect Pressure on Technology Companies

While the government tries to impose its ideological standards on AI providers, companies face a major dilemma: conform to these standards to win government contracts, or risk losing access to the massive government market.

OpenAI has introduced a version of ChatGPT specifically for government agencies called “ChatGPT Gov,” and xAI has launched “Grok for Government.” If these models are built to the government’s requirements, the effects of these policies will likely soon show up in the public versions as well.

Legal or Illegal? That Is the Question

Many experts believe that the Trump administration’s attempt to prevent state-level AI regulation is likely legally indefensible. However, when a government repeatedly violates the law and the judiciary fails to take a stand, the “illegality” no longer serves as an effective deterrent.

About the author: Fateme Moradkhani covers technology, surveillance, and AI ethics for Borna News Agency, with a focus on global cyber power and digital militarization.



Industry policies and technological innovation in artificial intelligence clusters: are central positions superior?

Cluster identification

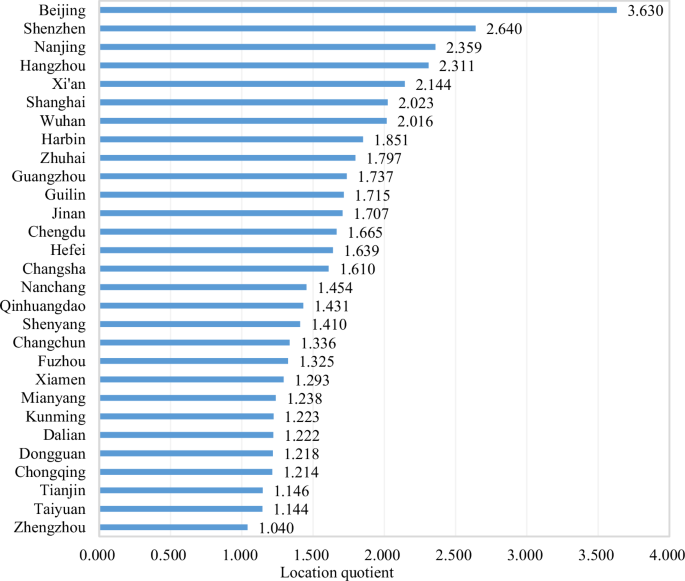

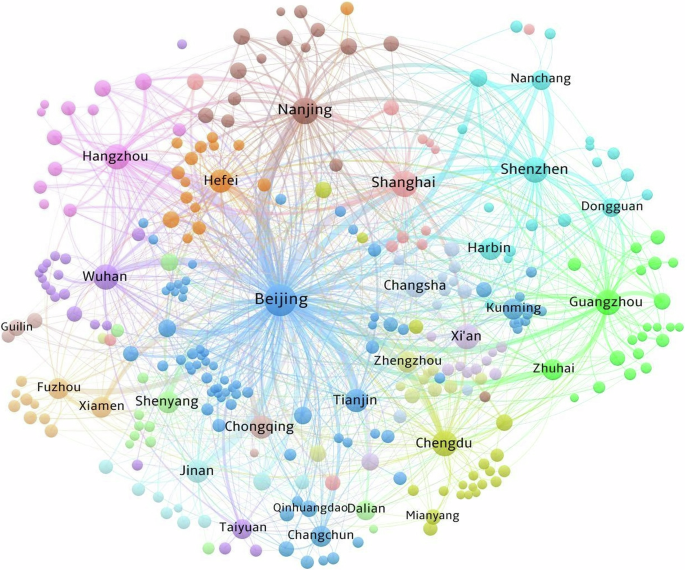

This research calculates the location quotient (LQ) of innovation output across the administrative regions in the AI industry. The results show that thirty-three cities have an LQ greater than 1 (Footnote 17). Four of them (Fuxin, Jilin, Sanya, and Shanwei) do not meet the innovation-scale threshold (the 80th percentile of patent applications) and are excluded (Footnote 18). The twenty-nine retained regions (Beijing, Shenzhen, Nanjing, Hangzhou, Xi’an, Shanghai, Wuhan, Harbin, Zhuhai, Guangzhou, Guilin, Jinan, Chengdu, Hefei, Changsha, Nanchang, Qinhuangdao, Shenyang, Changchun, Fuzhou, Xiamen, Mianyang, Kunming, Dalian, Dongguan, Chongqing, Tianjin, Taiyuan, and Zhengzhou) are regarded as potential AI clusters. The potential AI clusters are illustrated in Fig. 2.
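The two-stage screen (LQ above 1, then a minimum innovation scale) can be sketched as follows. This assumes the standard location-quotient definition, LQ_i = (AI patents_i / all patents_i) / (national AI patents / national patents); the study's exact numerator and denominator are defined in its methods, not in this section. The region labels, counts, and the `scale_threshold` value are hypothetical.

```python
from typing import Dict, List, Tuple


def location_quotients(ai: Dict[str, int], total: Dict[str, int]) -> Dict[str, float]:
    """LQ_i = (ai_i / total_i) / (sum of ai / sum of total)."""
    national_share = sum(ai.values()) / sum(total.values())
    return {r: (ai[r] / total[r]) / national_share for r in ai}


def potential_clusters(
    ai: Dict[str, int], total: Dict[str, int], scale_threshold: float
) -> Tuple[List[str], List[str]]:
    """Split regions with LQ > 1 into (retained, excluded) by the scale threshold."""
    lq = location_quotients(ai, total)
    specialised = [r for r in ai if lq[r] > 1]          # relatively specialised in AI
    retained = [r for r in specialised if ai[r] >= scale_threshold]
    excluded = [r for r in specialised if ai[r] < scale_threshold]
    return retained, excluded


# Hypothetical toy counts: AI patent applications and all patent applications.
ai_patents = {"A": 900, "B": 40, "C": 300, "D": 250}
all_patents = {"A": 3000, "B": 100, "C": 3000, "D": 1000}

retained, excluded = potential_clusters(ai_patents, all_patents, scale_threshold=200)
print("retained:", retained, "excluded:", excluded)
```

Region B plays the role of Fuxin or Sanya here: highly specialised (high LQ) but too small in absolute output to count as a cluster.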

To investigate the local and external innovation connections of potential AI clusters, we calculate the total volume of intraregional and interregional collaborations for these clusters, respectively. The outcomes are depicted in Table 1. The high total volume of intraregional collaborations in clusters such as Beijing, Shenzhen, Nanjing, Guangzhou, Shanghai, Hangzhou, Zhuhai, Wuhan, Chengdu, Tianjin, Jinan, and others indicates their focus on internal innovation activities. The high total volume of interregional collaborations among clusters such as Beijing, Nanjing, Shenzhen, Hangzhou, Shanghai, Guangzhou, Wuhan, Chengdu, Jinan, Tianjin, Nanchang, Hefei, and others indicates their emphasis on external resource acquisition. In conclusion, all twenty-nine potential AI clusters have both intraregional and interregional innovation collaborations, satisfying the criteria for cluster identification.

Because the total volume of interregional collaborations exceeds that of intraregional collaborations in every AI cluster, interregional cooperation is the main form of collaboration for AI clusters. We therefore construct the innovation network of interregional cooperation. Specifically, we match each region involved in an interregional cooperation patent with the other regions on that patent (Footnote 19). Connections involving at least one AI cluster are treated as network edges. Using total collaboration volume as the node weight and the number of collaborations as the edge weight, we depict the innovation network of the AI clusters in Fig. 3. The network is highly dense, and Beijing occupies a clearly central position. Nanjing, Hangzhou, and Shanghai stand out as significant cities in Eastern China, while Shenzhen and Guangzhou, two major cities in Southern China, serve as pivotal hubs. Wuhan and Chengdu, representing Central and Western China, respectively, actively engage in innovation collaborations with other areas.
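The network construction described above can be sketched with plain Python counters. This assumes each co-patent is represented as the list of its applicants’ regions and that a region’s total collaboration volume equals its weighted degree; both are assumptions about details the section does not spell out. The patent records and region labels are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, List, Set, Tuple


def build_network(
    patents: Iterable[List[str]], clusters: Set[str]
) -> Tuple[Counter, Counter]:
    """Return (node_weights, edge_weights) for the interregional network."""
    edges: Counter = Counter()
    for regions in patents:
        unique = sorted(set(regions))
        if len(unique) < 2:                      # single-region patent: intraregional, no edge
            continue
        for u, v in combinations(unique, 2):     # match each region with every other one
            if u in clusters or v in clusters:   # keep only edges touching an AI cluster
                edges[(u, v)] += 1               # edge weight = number of collaborations
    nodes: Counter = Counter()
    for (u, v), w in edges.items():
        nodes[u] += w                            # node weight = total collaboration volume
        nodes[v] += w
    return nodes, edges


# Hypothetical co-patent records (regions of each patent's applicants).
patents = [["A", "B"], ["A", "C", "D"], ["B", "A"], ["D"], ["X", "Y"]]
clusters = {"A", "B", "C", "D"}

nodes, edges = build_network(patents, clusters)
print("most central:", nodes.most_common(1), "edges:", dict(edges))
```

The X–Y link is dropped because neither region is an AI cluster, and the single-region patent contributes no edge.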

Descriptive statistics

This study reports descriptive statistics for all variables in Table 2. The mean values of TI, IP, and NC are 5.898, 2.006, and 2.682, respectively. The standard deviations of TI and IP are relatively high, indicating substantial gaps in both TI and IP across AI clusters.

Correlation analysis

Table 3 shows a positive correlation between IP and TI, suggesting that IP may enhance TI in the AI clusters. All pairwise correlation coefficients are below 0.800. In addition, this research conducts a variance inflation factor (VIF) test. The maximum VIF, 3.410, is well below the conventional threshold of 10, indicating that multicollinearity is not a serious concern in this study (e.g., Chen et al., 2024).
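For reference, the VIF of regressor j is 1 / (1 − R²_j), where R²_j comes from regressing that variable on all other regressors plus an intercept. A minimal numpy sketch on simulated data; the variables named `ip`, `nc`, and `ctrl` are hypothetical stand-ins, not the study’s data.

```python
import numpy as np


def vif(X: np.ndarray) -> np.ndarray:
    """Variance inflation factor for each column of X (n_obs x k)."""
    n, k = X.shape
    out = np.empty(k)
    for j in range(k):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        A = np.column_stack([np.ones(n), others])        # auxiliary regression with intercept
        beta, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ beta
        r2 = 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out


rng = np.random.default_rng(0)
ip = rng.normal(size=500)
nc = 0.5 * ip + rng.normal(size=500)     # moderately correlated with ip
ctrl = rng.normal(size=500)
scores = vif(np.column_stack([ip, nc, ctrl]))
print(scores.round(3), "max below 10:", bool(scores.max() < 10))
```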

Regression analysis

The regression results for IP and TI are reported in Table 4. All p-values of the AR(1) test are below 0.1, whereas those of the AR(2) test exceed 0.1: the first-differenced disturbances show first-order autocorrelation, which is expected by construction, but no second-order autocorrelation. We therefore cannot reject the null hypothesis of no second-order autocorrelation in the differenced disturbance term, which is the condition System-GMM requires. Furthermore, the p-values of the Sargan test all exceed 0.1, so the overidentifying restrictions are not rejected and the instruments appear valid, supporting estimation with System-GMM.

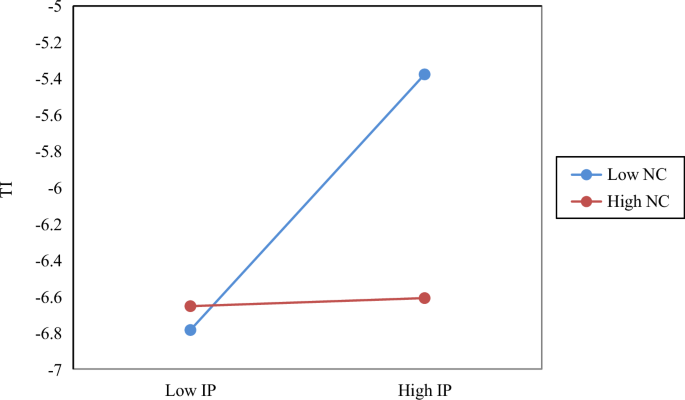

Model 1 contains only the control variables. In Model 2, the coefficient of IP on TI is 0.037 and statistically significant, indicating that IP promotes TI in the AI clusters; that is, the positive effects of IP on TI outweigh the negative ones. In Model 3, the interaction between IP and NC (i.e., IP*NC) is significantly negative, indicating that high NC weakens the positive impact of IP on TI in the AI clusters. This paper further visualizes the moderating effect of NC on the IP–TI relationship in Fig. 4: the same increase in IP is associated with a smaller increase in TI when NC is high.
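The shape of the moderating effect follows from the interaction term. Assuming, for illustration only, that Model 3 contains IP, NC, and their product among its regressors (the study’s Eq. (3) is not reproduced in this section, so the labels β₁ to β₃ and the indices i for cluster and t for year are hypothetical), the marginal effect of IP on TI is linear in NC:

$$\mathrm{TI}_{it}=\cdots+\beta_{1}\mathrm{IP}_{it}+\beta_{2}\mathrm{NC}_{it}+\beta_{3}\left(\mathrm{IP}_{it}\times\mathrm{NC}_{it}\right)+\cdots\quad\Longrightarrow\quad\frac{\partial\,\mathrm{TI}_{it}}{\partial\,\mathrm{IP}_{it}}=\beta_{1}+\beta_{3}\,\mathrm{NC}_{it}$$

With β₃ < 0, as Model 3 reports for IP*NC, each one-unit rise in NC lowers the marginal effect of IP by |β₃|, which is why the IP–TI slope in Fig. 4 is flatter for clusters with high NC.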

Robustness checks

Sensitivity analysis

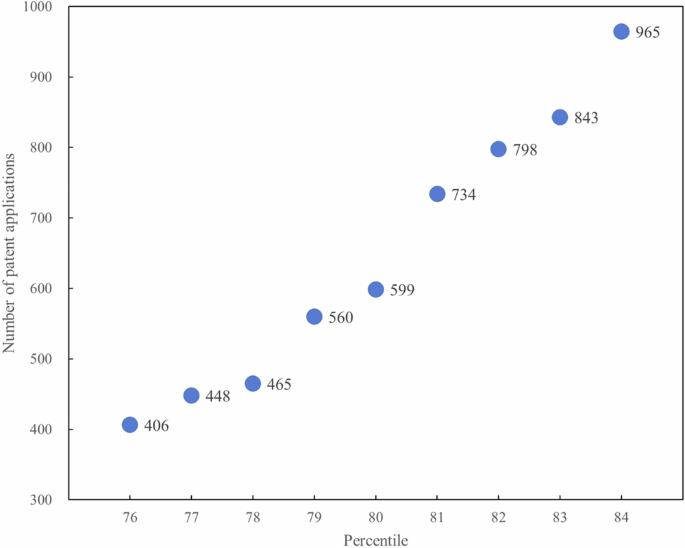

Quartiles are a widely used classification cutoff. In this section, we therefore use the third quartile (i.e., the 75th percentile) instead of the 80th percentile to reidentify potential clusters. The 75th percentile of patent applications across all regions is 386. The same four regions (Fuxin, Jilin, Sanya, and Shanwei) are still excluded (see Supplementary Table B), consistent with the result in Section “Cluster identification”. In addition, to avoid overlapping with the 75th percentile, we let the cutoff vary by ±4 percentage points around the 80th percentile and repeat the test at each percentile from the 76th to the 84th. The results are shown in Fig. 5. Across this range, the patent-application threshold falls between 374 and 969, and the four regions remain excluded. Therefore, the identification of the twenty-nine AI clusters in this paper is robust.
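The percentile sweep can be sketched as below. This assumes a region is retained when its patent-application count is at least the percentile threshold computed over all regions; the counts and candidate set are hypothetical, and numpy’s default linear interpolation may differ from the percentile definition the study used.

```python
import numpy as np


def excluded_at(counts: dict, candidates: set, pct: float):
    """Threshold at the pct-th percentile of all regions' patent applications,
    and the LQ > 1 candidates that fall below it."""
    threshold = float(np.percentile(list(counts.values()), pct))
    return threshold, {r for r in candidates if counts[r] < threshold}


# Hypothetical patent-application counts for 20 regions (R0..R19).
values = [5, 8, 12, 15, 20, 25, 30, 40, 50, 60,
          70, 80, 90, 100, 110, 120, 400, 500, 600, 700]
counts = {f"R{i}": v for i, v in enumerate(values)}
candidates = {"R8", "R13", "R16", "R17", "R19"}    # hypothetical regions with LQ > 1

_, baseline = excluded_at(counts, candidates, 80)  # benchmark: 80th percentile
for pct in range(75, 85):                          # 75th, then 76th through 84th
    threshold, excluded = excluded_at(counts, candidates, pct)
    print(f"p{pct}: threshold={threshold:.1f}, "
          f"excluded={sorted(excluded)}, unchanged={excluded == baseline}")
```

The conclusion is robust in the same sense as in the text: the threshold moves, but no candidate crosses it, so the excluded set never changes.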

Placebo test

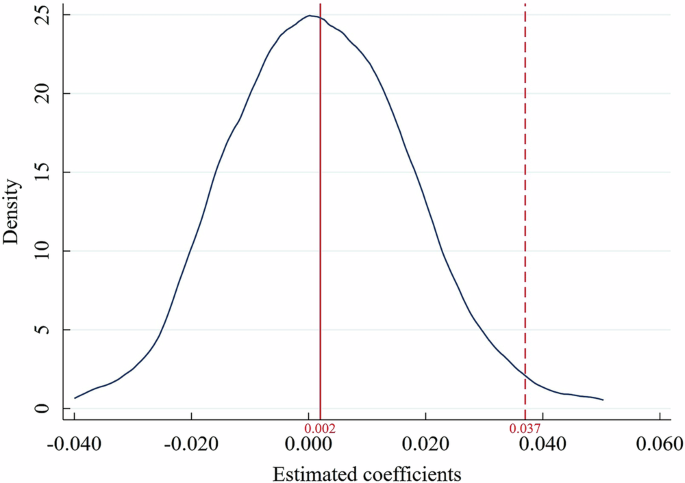

A placebo test rules out the possibility that random factors unrelated to IP account for the observed effects, making the estimate more reliable. Following Zeng et al. (2024), we randomly shuffle IP across all clusters and then estimate the effect of the randomly assigned IP on TI using System-GMM regression. This procedure is repeated 1000 times. If the shuffled IP still showed an effect on TI comparable to the baseline, the earlier conclusions could be attributed to randomness rather than to IP. The kernel density of the estimated coefficients, plotted in Fig. 6, is centered around 0.002, far from the actual IP coefficient of 0.037. Therefore, the improvement in TI is driven by IP rather than by random factors.
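The permutation logic can be sketched as follows. A plain OLS slope stands in for the System-GMM estimator the study actually uses, and the data are simulated with a hypothetical true effect, so only the procedure carries over: shuffle IP, re-estimate, repeat 1000 times, and compare the placebo distribution with the real estimate.

```python
import numpy as np


def ols_slope(x: np.ndarray, y: np.ndarray) -> float:
    """Slope from regressing y on x with an intercept (stand-in for System-GMM)."""
    xc = x - x.mean()
    return float(xc @ (y - y.mean()) / (xc @ xc))


rng = np.random.default_rng(42)
n = 300
ip = rng.poisson(5, size=n).astype(float)            # hypothetical policy counts
ti = 0.04 * ip + rng.normal(scale=0.1, size=n)       # hypothetical true effect of 0.04

real = ols_slope(ip, ti)
placebo = np.array([ols_slope(rng.permutation(ip), ti) for _ in range(1000)])
p_value = float(np.mean(np.abs(placebo) >= abs(real)))   # share of placebos as large as real
print(f"real={real:.3f}, placebo mean={placebo.mean():.4f}, p={p_value:.3f}")
```

Shuffling breaks any link between IP and TI, so the placebo coefficients cluster near zero; a real estimate far in the tail, as in Fig. 6, is what the test looks for.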

Policy penetration

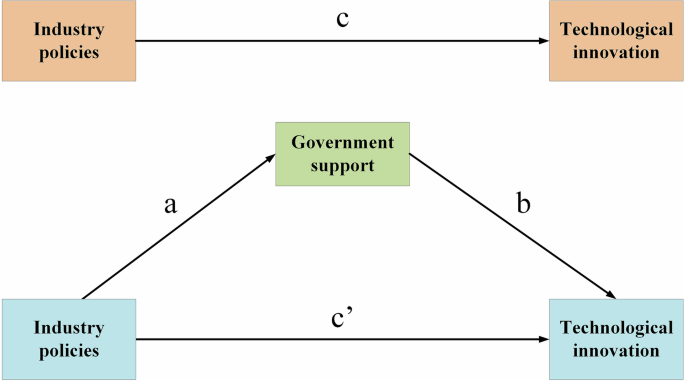

Measuring IP by the number of incentive policies may not capture how rigorously those policies are implemented. Because enterprises act as the principal agents of innovation (Lei and Xie, 2023), we assess the impact of IP more accurately by examining whether IP actually reaches enterprises in practice. Specifically, IP penetrates in the following sequence: IP documents are released, enterprises obtain government support (GS), and TI is generated. Following Xu et al. (2023), this article constructs the following mediation effect model:

$$\mathrm{TI}^{\wedge}_{jt}=\varphi_{1}+\lambda_{1}\mathrm{TI}^{\wedge}_{j,t-1}+c\,\mathrm{IP}_{jt}+\gamma_{11}\mathrm{Control}_{jt}+\eta_{j}+\varepsilon_{jt}$$

(4)

$$\mathrm{GS}_{jt}=\varphi_{2}+\lambda_{2}\mathrm{GS}_{j,t-1}+a\,\mathrm{IP}_{jt}+\gamma_{21}\mathrm{Control}_{jt}+\eta_{j}+\varepsilon_{jt}$$

(5)

$$\mathrm{TI}^{\wedge}_{jt}=\varphi_{3}+\lambda_{3}\mathrm{TI}^{\wedge}_{j,t-1}+c'\,\mathrm{IP}_{jt}+b\,\mathrm{GS}_{jt}+\gamma_{31}\mathrm{Control}_{jt}+\eta_{j}+\varepsilon_{jt}$$

(6)

where \(\mathrm{TI}^{\wedge}_{jt}\) represents the number of invention patent applications of enterprise \(j\) in year \(t\); \(\mathrm{IP}_{jt}\) is the number of incentive policies in the cluster where enterprise \(j\) is located; \(\mathrm{GS}_{jt}\) is measured by the government subsidies received by enterprise \(j\) (e.g., Chen and Wang, 2022b); and \(\mathrm{Control}_{jt}\) represents the control variables: enterprise profitability (EP), capital structure (CS), and operating capacity (OC). EP is measured by the return on total assets (e.g., Chen and Wang, 2022a), CS by the asset‒liability ratio (e.g., Peng and Tao, 2022), and OC by the asset turnover ratio (e.g., Xu and Chen, 2020). All other symbols are defined as in Eq. (3).

The mediation mechanism is plotted in Fig. 7. If the coefficients c, a, and b are all significant, GS has a mediating effect (Zhong and Zhang, 2024).
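The stepwise rule can be written as a small decision function. This sketch assumes a 5% significance level (the text does not state the cutoff) and adds the conventional follow-up check on c′ in Eq. (6) to distinguish full from partial mediation; the p-values and coefficients in the example are hypothetical, and the indirect effect is reported as the product a × b.

```python
def stepwise_mediation(p_c: float, p_a: float, p_b: float, p_c_prime: float,
                       alpha: float = 0.05) -> str:
    """Causal-steps classification for Eqs. (4)-(6).

    p_c: p-value of c (IP -> TI^, Eq. 4); p_a: a (IP -> GS, Eq. 5);
    p_b: b (GS -> TI^, Eq. 6); p_c_prime: c' (IP -> TI^ holding GS fixed, Eq. 6).
    """
    if p_c >= alpha:
        return "none"            # under this rule, no total effect of IP to mediate
    if p_a >= alpha or p_b >= alpha:
        return "inconclusive"    # stepwise rule fails; test a*b directly instead
    # a and b both significant: GS mediates; c' decides partial vs full mediation
    return "partial" if p_c_prime < alpha else "full"


def indirect_effect(a: float, b: float) -> float:
    """Indirect effect of IP on TI^ transmitted through GS."""
    return a * b


# Hypothetical p-values and coefficients, not the study's estimates.
print(stepwise_mediation(0.001, 0.004, 0.002, 0.03), indirect_effect(0.5, 0.4))
```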

We obtain a list of AI enterprises from the China Stock Market and Accounting Research (CSMAR) database (Footnote 20). Based on geographical location, we select 125 firms within the clusters as our research sample (Footnote 21); their enterprise data also come from CSMAR. System-GMM estimation is applied to investigate the mediating effect of GS. As displayed in Table 5, all p-values for AR(1) are below 0.1, while those for AR(2) and the Sargan test exceed 0.1; thus, System-GMM is appropriate. The coefficients for IP → TI^, IP → GS, and GS → TI^ are all significantly positive at the 1% level. Hence, IP promotes TI^ by strengthening GS, which verifies the penetration effect of IP.

Changing the regression model

Poisson regression is commonly used when the dependent variable is count data, but it assumes that the variance equals the mean. In this study, the dependent variable is overdispersed, i.e., its variance exceeds its mean (Footnote 22). We therefore adopt negative binomial regression. The results in Table 6 show that the coefficient of IP is significantly positive and the coefficient of the IP–NC interaction is significantly negative. The empirical results of this research are therefore robust to this change in model specification.
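A quick descriptive check motivates the switch: under a Poisson model the variance equals the mean, so a variance-to-mean ratio well above 1 signals overdispersion. The counts below are hypothetical, and this unconditional ratio is only a rough screen; a formal test (for example, a likelihood-ratio test of the negative binomial dispersion parameter) would normally accompany it.

```python
from statistics import mean, variance


def dispersion_ratio(counts):
    """Sample variance divided by sample mean; roughly 1 under a Poisson model."""
    return variance(counts) / mean(counts)


# Hypothetical patent counts with a long right tail, typical of innovation data.
patent_counts = [0, 1, 1, 2, 3, 5, 8, 20, 45, 120]
ratio = dispersion_ratio(patent_counts)
print(f"mean={mean(patent_counts):.1f}, variance={variance(patent_counts):.1f}, ratio={ratio:.1f}")
```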


AI’s last mile just got a supercomputer, courtesy of ASUS and NVIDIA

They say that the most difficult part of transportation planning is last-mile delivery. A network of warehouses and trucks can bring products within a mile of almost all customers, but logistical challenges and costs add up quickly in the process of delivering those goods to the right doors at the right time.

There’s a similar pattern in the AI space. Massive data center installations have empowered astonishing cloud-based AI services, but many researchers, developers, and data scientists need the power of an AI supercomputer to travel that last mile. They need machines that offer the convenience and space-saving design of a desktop PC but go well above and beyond the capabilities of consumer-grade hardware, especially when it comes to available GPU memory.

Enter a new class of AI desktop supercomputers, powered by ASUS and NVIDIA.

The upcoming ASUS AI supercomputer lineup, spearheaded by the ASUS ExpertCenter Pro ET900N G3 desktop PC and the ASUS Ascent GX10 mini-PC, wields the latest NVIDIA Grace Blackwell superchips to deliver astounding performance in AI workflows. For those who need local, private supercomputing resources but for whom a data center or rack server installation isn’t feasible, these systems provide a transformative opportunity to seize the capabilities of AI.

Scaling up memory to meet the parameter count of large AI models

A key piece of the puzzle for accelerating locally run AI workloads is available GPU memory. If a given model doesn’t fit into local memory, it may run very slowly, or it may not run at all. The 32GB of VRAM provided by the highest-end NVIDIA consumer-grade graphics card on the market, the NVIDIA GeForce RTX 5090, is sufficient for many smaller models. But scaling up your system’s VRAM to handle models with even more parameters isn’t necessarily a straightforward affair.

Multi-GPU systems are a feasible solution for some users, but others have been looking for a solution designed specifically for the needs of AI workflows. By equipping the Ascent GX10 and ExpertCenter Pro ET900N G3 with large single pools of coherent system memory, we’re able to put astonishing quantities of memory at your fingertips. The Ascent GX10 wields four times as much GPU memory as a GeForce RTX 5090, while the ExpertCenter Pro ET900N G3 offers up to 784GB, over twice as much GPU memory as a workstation equipped with four NVIDIA RTX PRO™ 6000 GPUs.

AI supercomputer performance in a desktop PC form factor

Designed from the ground up for AI workflows, the ASUS ExpertCenter Pro ET900N G3 will be one of the first systems in a new class of computers based on the NVIDIA DGX Station.

This system is powered by the NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip. Featuring an NVIDIA Blackwell Ultra GPU and an NVIDIA Grace CPU connected via the NVIDIA® NVLink®-C2C interconnect, this superchip brings a slice of data center performance to a desktop workstation. Going well beyond today’s high-end desktop systems, the ExpertCenter Pro ET900N G3, with up to 784GB of large coherent memory, lets businesses and researchers develop and run large-scale AI training and inference workloads.

It all runs on the NVIDIA AI Software Stack, including NVIDIA DGX OS, a customized installation of Ubuntu Linux purpose-built for optimized performance in AI, machine learning, and analytics applications, with the ability to scale easily across multiple NVIDIA DGX Station systems.

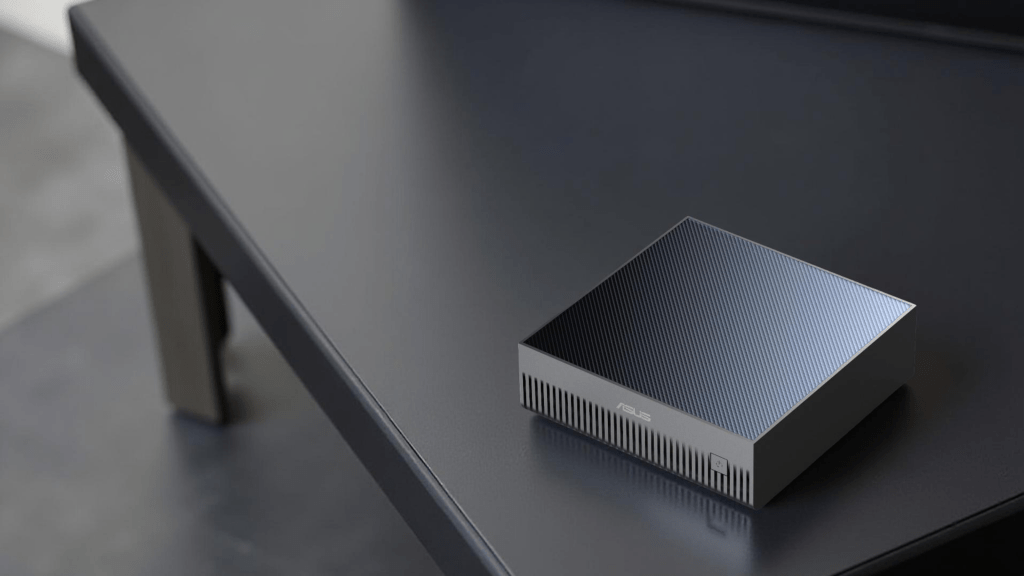

The AI supercomputer in the palm of your hand: the ASUS Ascent GX10

The ASUS ExpertCenter Pro ET900N G3 is much easier to deploy than a solution based on rack servers, but there are situations where even a desktop-class form factor is still too large. The ASUS Ascent GX10 democratizes AI by putting petaflop-scale AI computing capabilities in a design that you can hold in the palm of your hand.

The ASUS NUC lineup demonstrates our proven expertise in offering complete PC experiences in ultracompact designs. No mere iterative step forward, the Ascent GX10 takes our experience in the mini-PC market and melds it with the groundbreaking performance of the NVIDIA GB10 Grace Blackwell Superchip. This superchip connects a Grace CPU with 20 Arm cores to a robust Blackwell GPU through NVIDIA® NVLink®-C2C technology. All told, it delivers up to 1,000 AI TOPS of processing power, and its 128GB of coherent unified system memory allows the system to handle AI models with up to 200 billion parameters.

Need the Ascent GX10 to handle even larger models, such as Llama 3.1 with its 405 billion parameters? Integrated NVIDIA® ConnectX®-7 Network Technology allows you to harness the AI performance of two Ascent GX10 systems working together.
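The memory figures above can be sanity-checked by counting the bytes needed for model weights. This back-of-the-envelope sketch assumes 4-bit (0.5-byte) quantized weights and decimal gigabytes; it ignores the KV cache, activations, and runtime overhead, so real headroom is smaller than it suggests.

```python
def weight_memory_gb(params: float, bits_per_param: int) -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


def fits(params: float, bits_per_param: int, memory_gb: float) -> bool:
    """True if the weights alone fit in the given memory budget."""
    return weight_memory_gb(params, bits_per_param) <= memory_gb


GX10_MEMORY_GB = 128   # coherent unified memory per Ascent GX10, per the text

for name, params in [("200B model", 200e9), ("405B model", 405e9)]:
    for systems in (1, 2):
        ok = fits(params, 4, GX10_MEMORY_GB * systems)
        print(f"{name}, {systems} system(s): "
              f"{weight_memory_gb(params, 4):.1f} GB at 4-bit, fits={ok}")
```

Under these assumptions a 200-billion-parameter model needs about 100 GB and fits in one system, while a 405-billion-parameter model needs about 202.5 GB and only fits across two linked systems.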

Part of a complete AI solution set

ASUS stands out from every other manufacturer on the market with the breadth of AI products that we’re able to offer. The ExpertCenter Pro ET900N G3 and Ascent GX10 slot into a complete lineup that meets the needs of AI enthusiasts at every level. For those looking to build their own AI PC out of consumer-grade components, for those who need AI performance built into their everyday laptop, for enterprises who need a single-rack AI server solution, even for those institutions looking to design, deploy, and operate a data center for AI applications, the ASUS product portfolio is ready.

Yet the ExpertCenter Pro ET900N G3 and Ascent GX10 are far more than mere additions to our AI product stack. The jump from AI PC to AI supercomputer is nothing less than revolutionary, and these systems give you this level of performance in a complete turnkey solution that fits on a desktop.

Aspects of these systems are still in development, but we’ll share more details as soon as we’re able. In the meantime, explore how ASUS can help your organization seize the capabilities of AI.


ALL Accor Booking & Loyalty

ALL.com and the ALL.com app deliver seamless digital booking and so much more. This true one-stop shop for travel and daily life sits at the heart of how we deliver exceptional guest experiences while driving measurable growth and value.

Greatest choice, best price: easily accessible and seamlessly bookable, ALL.com amplifies brand visibility and showcases hotels to attract new guests.

Diversifying our offer: ALL.com places our complete ecosystem – from restaurants to meeting solutions and more – at clients’ fingertips, unlocking incremental revenue for our properties.

Powerful business driver: ALL.com delivers visibility, scale, and reputation, driving direct booking, fostering long-term loyalty, and reducing distribution costs for hotel owners.
