Brand Stories

Industry policies and technological innovation in artificial intelligence clusters: are central positions superior?

Cluster identification

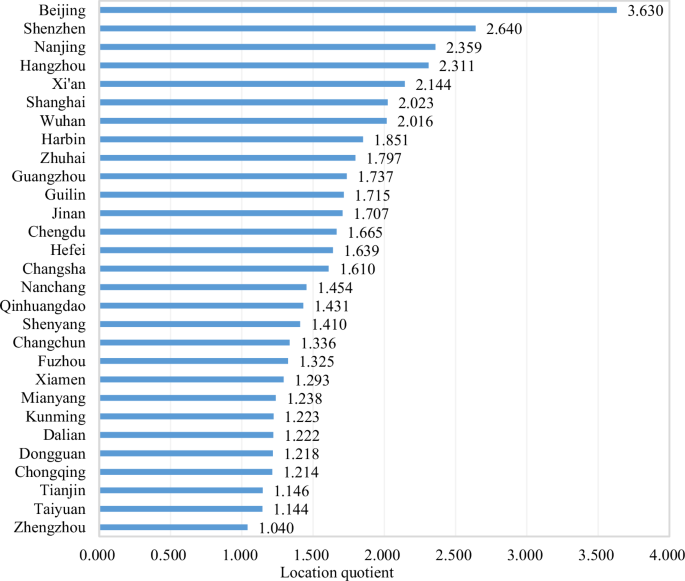

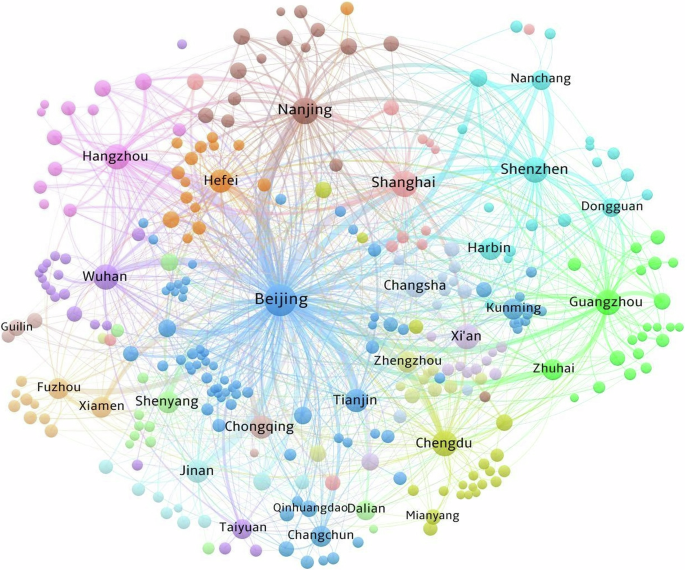

This research calculates the LQ of innovation output in the AI industry across administrative regions. The results show that thirty-three cities have an LQ greater than 1 (Footnote 17). Four of them (Fuxin, Jilin, Sanya, and Shanwei) do not meet the innovation-scale threshold and are therefore excluded (Footnote 18). The remaining twenty-nine regions (Beijing, Shenzhen, Nanjing, Hangzhou, Xi’an, Shanghai, Wuhan, Harbin, Zhuhai, Guangzhou, Guilin, Jinan, Chengdu, Hefei, Changsha, Nanchang, Qinhuangdao, Shenyang, Changchun, Fuzhou, Xiamen, Mianyang, Kunming, Dalian, Dongguan, Chongqing, Tianjin, Taiyuan, and Zhengzhou) are regarded as potential AI clusters, as illustrated in Fig. 2.
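The identification rule can be sketched in a few lines. This is a minimal sketch, not the authors' code. It makes two assumptions. First, it uses the standard location-quotient definition: a region's share of AI patents in its own total patents, divided by the same share nationwide. Second, it treats the innovation-scale threshold as the 80th percentile of AI patent applications across regions, which is the cutoff the sensitivity analysis refers to. The function names and the six regions with their counts are hypothetical.

```python
import numpy as np

def location_quotient(ai_patents, all_patents):
    """LQ_i = (AI_i / Total_i) / (sum AI / sum Total): regional vs national AI share."""
    ai = np.asarray(ai_patents, dtype=float)
    total = np.asarray(all_patents, dtype=float)
    return (ai / total) / (ai.sum() / total.sum())

def identify_clusters(regions, ai_patents, all_patents, pct=80):
    """Return (regions with LQ > 1, regions also meeting the scale threshold, threshold)."""
    lq = location_quotient(ai_patents, all_patents)
    # Assumed scale threshold: the pct-th percentile of AI patent applications.
    threshold = float(np.percentile(ai_patents, pct))
    specialised = [r for r, q in zip(regions, lq) if q > 1]
    retained = [r for r, q, a in zip(regions, lq, ai_patents) if q > 1 and a >= threshold]
    return specialised, retained, threshold

# Hypothetical regions and counts, for illustration only.
regions = ["A", "B", "C", "D", "E", "F"]
ai_patents = [900, 50, 400, 30, 120, 10]
all_patents = [3000, 1000, 2000, 1500, 4000, 50]

specialised, retained, threshold = identify_clusters(regions, ai_patents, all_patents)
print("LQ > 1:", specialised)
print("threshold:", threshold, "retained:", retained)
```

In this toy example, region F is specialised (LQ > 1) but too small to pass the scale threshold. It is dropped in the same way as the four excluded cities above.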

To investigate the local and external innovation connections of the potential AI clusters, we calculate the total volume of intraregional and interregional collaborations for each cluster. The results are reported in Table 1. The high volume of intraregional collaborations in clusters such as Beijing, Shenzhen, Nanjing, Guangzhou, Shanghai, Hangzhou, Zhuhai, Wuhan, Chengdu, Tianjin, and Jinan indicates a focus on internal innovation activities. The high volume of interregional collaborations in clusters such as Beijing, Nanjing, Shenzhen, Hangzhou, Shanghai, Guangzhou, Wuhan, Chengdu, Jinan, Tianjin, Nanchang, and Hefei indicates an emphasis on acquiring external resources. In sum, all twenty-nine potential AI clusters engage in both intraregional and interregional innovation collaborations and thus satisfy the criteria for cluster identification.

Because the total volume of interregional collaborations exceeds that of intraregional collaborations in every AI cluster, interregional cooperation is the main form of collaboration for AI clusters. We therefore construct the innovation network of interregional cooperation. Specifically, we pair each region involved in an interregional cooperation patent with the other regions on that patent (Footnote 19), and we treat connections involving at least one AI cluster as network edges. We use the total collaboration volume as the node weight and the number of collaborations as the edge weight, and depict the resulting innovation network of the AI clusters in Fig. 3. The network is highly dense, and Beijing occupies an overwhelmingly central position. Nanjing, Hangzhou, and Shanghai stand out as major cities in Eastern China, while Shenzhen and Guangzhou, two major cities in Southern China, serve as pivotal hubs. Wuhan and Chengdu, representing Central and Western China, respectively, actively collaborate with other regions.
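The collaboration counts and the weighted network can be sketched as follows. The sketch rests on four counting rules:

- Each co-applied patent is represented by the list of its applicants' regions.
- A patent with several applicants from one region counts as one intraregional collaboration.
- A patent spanning k regions adds one collaboration to each of its k(k−1)/2 region pairs.
- A node's weight is the sum of its edge weights.

These rules, the function name, and the sample patents are assumptions for illustration. The paper's exact matching rule may differ.

```python
from collections import Counter
from itertools import combinations

def collaboration_network(patents, clusters):
    """Count intraregional collaborations and build weighted interregional edges.

    patents: one list of applicant regions per co-applied patent.
    clusters: set of AI cluster names; only edges touching a cluster are kept.
    """
    intra = Counter()   # region -> number of intraregional collaborations
    edges = Counter()   # (region_u, region_v) -> number of collaborations (edge weight)
    for regions in patents:
        distinct = sorted(set(regions))
        if len(distinct) == 1:
            if len(regions) > 1:                # several applicants, all in one region
                intra[distinct[0]] += 1
            continue
        for u, v in combinations(distinct, 2):  # every pair of regions on the patent
            if u in clusters or v in clusters:
                edges[(u, v)] += 1
    node_weight = Counter()  # node weight = total interregional collaboration volume
    for (u, v), w in edges.items():
        node_weight[u] += w
        node_weight[v] += w
    return intra, edges, node_weight

# Hypothetical co-applied patents.
patents = [
    ["Beijing", "Beijing"],
    ["Beijing", "Shanghai"],
    ["Beijing", "Shanghai", "Nanjing"],
    ["Nanjing", "Hangzhou"],
    ["Shenzhen", "Shenzhen", "Shenzhen"],
    ["Lhasa", "Xining"],              # no cluster involved, so no edge is kept
]
clusters = {"Beijing", "Shanghai", "Nanjing", "Hangzhou", "Shenzhen"}

intra, edges, node_weight = collaboration_network(patents, clusters)
print(dict(intra))
print(dict(edges))
print(node_weight.most_common())
```

The edge dictionary can be passed to any graph library for plotting. Node size would follow `node_weight` and line width the edge counts.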

Descriptive statistics

Table 2 reports descriptive statistics for all variables. The mean values of TI, IP, and NC are 5.898, 2.006, and 2.682, respectively. The relatively high standard deviations of TI and IP indicate substantial gaps in both TI and IP across AI clusters.

Correlation analysis

Table 3 shows a positive correlation between IP and TI, suggesting that IP may enhance TI in the AI clusters. All pairwise correlation coefficients are below 0.800. We also conduct a variance inflation factor (VIF) test. The maximum VIF, 3.410, is well below the common threshold of 10, indicating that multicollinearity is not a serious concern in this study (e.g., Chen et al., 2024).
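For reference, the VIF of regressor j is 1/(1 − R²_j), where R²_j comes from regressing that regressor on all the others plus an intercept. Values above 10 are the usual warning sign. Below is a minimal NumPy sketch on synthetic data; the function name and the data are illustrative, not the study's variables.

```python
import numpy as np

def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing column j on the others."""
    X = np.asarray(X, dtype=float)
    n, k = X.shape
    vifs = []
    for j in range(k):
        y = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])  # add intercept
        beta, *_ = np.linalg.lstsq(others, y, rcond=None)
        resid = y - others @ beta
        centered = y - y.mean()
        r_squared = 1.0 - (resid @ resid) / (centered @ centered)
        vifs.append(1.0 / (1.0 - r_squared))
    return np.array(vifs)

rng = np.random.default_rng(0)
x1 = rng.normal(size=500)
x2 = rng.normal(size=500)
x3 = x1 + 0.1 * rng.normal(size=500)   # nearly collinear with x1

print("independent:", variance_inflation_factors(np.column_stack([x1, x2])).round(2))
print("with x3:    ", variance_inflation_factors(np.column_stack([x1, x2, x3])).round(2))
```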

Regression analysis

Table 4 reports the regression results for IP and TI. In all models, the p-values of the AR(1) test are below 0.1 and those of the AR(2) test are above 0.1. The first-differenced residuals therefore display first-order autocorrelation, as expected, but no second-order autocorrelation, so we cannot reject the null hypothesis of no second-order autocorrelation, which is the condition System-GMM requires. Furthermore, the p-values of the Sargan test all exceed 0.1. The null hypothesis that the overidentifying restrictions are valid therefore cannot be rejected, and the instruments appear valid. Estimation by System-GMM is therefore appropriate.

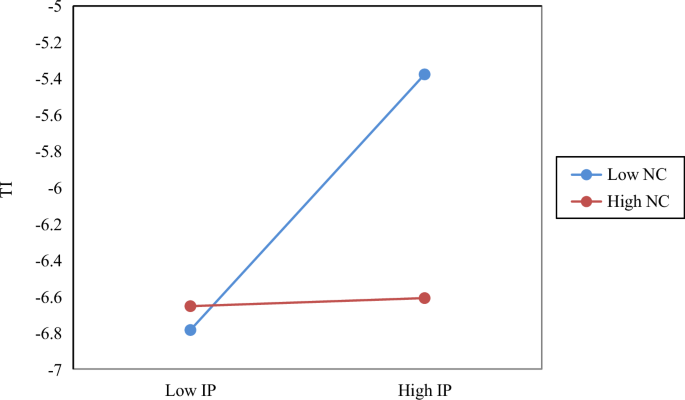

Model 1 contains only the control variables. In Model 2, the coefficient of IP on TI is 0.037 and statistically significant, indicating that IP promotes TI in the AI clusters. In other words, the positive effects of IP on TI outweigh the negative ones. In Model 3, the interaction term between IP and NC (IP*NC) is significantly negative, indicating that a high NC weakens the positive impact of IP on TI in the AI clusters. Fig. 4 visualizes this moderating effect: when NC is high, a given increase in IP is associated with a smaller increase in TI.
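With an interaction term, the marginal effect of IP on TI depends on NC. If the model contains β₁·IP + β₃·(IP×NC), then ∂TI/∂IP = β₁ + β₃·NC, so a negative β₃ shrinks the effect as NC rises. The sketch below evaluates this expression. Its coefficient values are hypothetical placeholders, not the Model 3 estimates, and it ignores the dynamic (lagged TI) structure of the System-GMM model.

```python
def marginal_effect_of_ip(b_ip, b_interaction, nc):
    """Marginal effect dTI/dIP = b_ip + b_interaction * NC for a model with an IP*NC term."""
    return b_ip + b_interaction * nc

def turning_point(b_ip, b_interaction):
    """NC value at which the marginal effect of IP reaches zero (needs b_interaction != 0)."""
    return -b_ip / b_interaction

# Hypothetical coefficients for illustration only (not the paper's estimates).
B_IP, B_INTERACTION = 0.05, -0.01

for nc in (1.0, 2.682, 4.0):  # 2.682 is the sample mean of NC reported in Table 2
    effect = marginal_effect_of_ip(B_IP, B_INTERACTION, nc)
    print(f"NC = {nc:.3f}: dTI/dIP = {effect:.4f}")
print("effect reaches zero at NC =", turning_point(B_IP, B_INTERACTION))
```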

Robustness checks

Sensitivity analysis

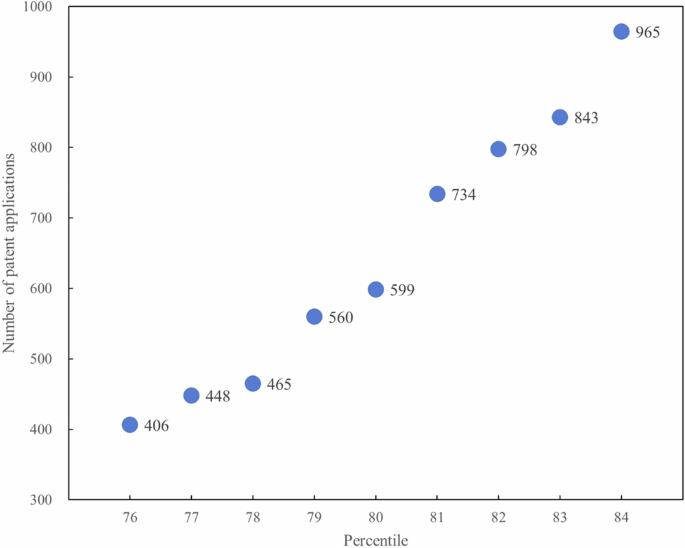

Quartiles are a widely used classification method. In this section, we use the third quartile (i.e., the 75th percentile) instead of the 80th percentile to reidentify potential clusters. The 75th percentile of patent applications across all regions is 386. The four regions (Fuxin, Jilin, Sanya, and Shanwei) remain excluded (see Supplementary Table B), consistent with the results in Section “Cluster identification”. In addition, to avoid overlapping with the 75th percentile, we allow the cutoff to vary by ±4 percentage points around the 80th percentile and repeat the test for each percentile from the 76th to the 84th. The results are shown in Fig. 5. Across this range, the patent-application threshold lies between 374 and 969, and the four regions above remain excluded. Therefore, the identification of the twenty-nine AI clusters is robust.
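The percentile sweep can be sketched as follows. For each cutoff from the 76th to the 84th percentile, it recomputes the threshold and checks that the small LQ > 1 regions stay below it. The region labels and counts are hypothetical. The sketch also assumes NumPy's default linear interpolation for the percentile, since the paper does not state which percentile method it used.

```python
import numpy as np

def excluded_by_percentile(counts, candidates, percentiles):
    """Map each percentile p to (threshold, candidates whose count is below it)."""
    values = np.array(list(counts.values()), dtype=float)
    result = {}
    for p in percentiles:
        threshold = float(np.percentile(values, p))  # default linear interpolation
        below = sorted(r for r in candidates if counts[r] < threshold)
        result[p] = (threshold, below)
    return result

# Hypothetical data: 20 regions with 10, 20, ..., 200 patent applications.
counts = {f"R{i}": 10 * (i + 1) for i in range(20)}
small_specialised = ["R0", "R1"]  # hypothetical regions with LQ > 1 but low scale

sweep = excluded_by_percentile(counts, small_specialised, range(76, 85))
for p, (threshold, below) in sweep.items():
    print(p, round(threshold, 1), below)

always_excluded = all(below == small_specialised for _, below in sweep.values())
print("same regions excluded at every cutoff:", always_excluded)
```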

Placebo test

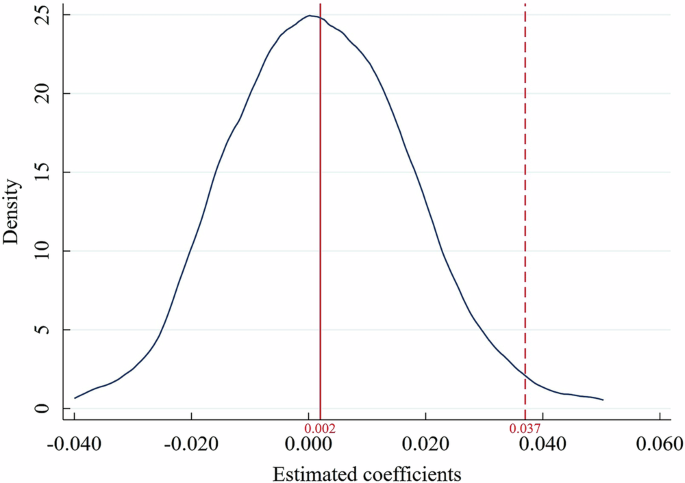

A placebo test rules out the possibility that random factors unrelated to IP drive the observed effects, which makes the estimates more reliable. Following Zeng et al. (2024), we randomly shuffle IP across all clusters and then estimate the impact of the randomly assigned IP on TI using System-GMM. This procedure is repeated 1000 times. If the randomly assigned IP still showed a clear effect on TI, the baseline results could reflect randomness rather than IP. The kernel density of the estimated coefficients in Fig. 6 shows that the placebo coefficients of IP are concentrated around 0.002, far from the true coefficient of 0.037. Therefore, the improvement in TI is driven by IP rather than by random factors.
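The logic of the placebo test can be sketched with a permutation loop. To keep the sketch short and self-contained, it swaps System-GMM for a simple OLS slope. It therefore illustrates only the shuffling procedure, not the paper's estimator. The data are synthetic and the function names are hypothetical.

```python
import numpy as np

def ols_slope(x, y):
    """Slope from a bivariate OLS regression of y on x (with intercept)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc = x - x.mean()
    return float(xc @ (y - y.mean()) / (xc @ xc))

def placebo_slopes(x, y, n_reps=1000, seed=0):
    """Re-estimate the slope n_reps times after randomly permuting x across units."""
    rng = np.random.default_rng(seed)
    return np.array([ols_slope(rng.permutation(x), y) for _ in range(n_reps)])

# Synthetic example: y depends on x, so shuffling x should destroy the relationship.
rng = np.random.default_rng(42)
x = rng.poisson(2.0, size=300).astype(float)
y = 5.0 + 0.5 * x + rng.normal(0.0, 1.0, size=300)

true_slope = ols_slope(x, y)
placebo = placebo_slopes(x, y)
share_as_large = float(np.mean(np.abs(placebo) >= abs(true_slope)))
print(f"true slope {true_slope:.3f}, mean placebo slope {placebo.mean():.4f}, "
      f"share of placebo |slopes| >= true: {share_as_large:.3f}")
```

Plotting a kernel density of `placebo` alongside a vertical line at `true_slope` reproduces the kind of figure described for Fig. 6.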

Policy penetration

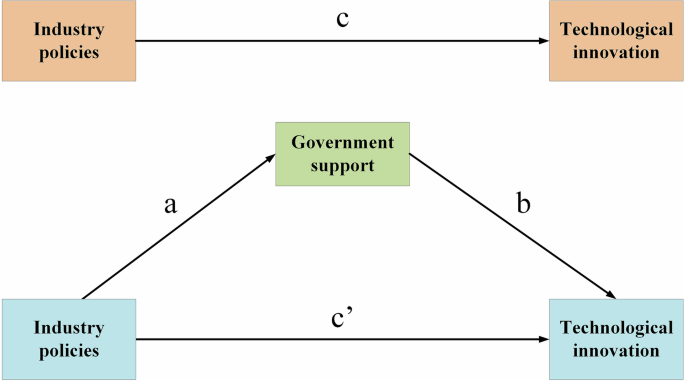

Measuring IP by the number of incentive policies may not capture how rigorously those policies are implemented. Because enterprises are the principal agents of innovation (Lei and Xie, 2023), we assess the impact of IP more accurately by examining whether IP actually reaches enterprises. Specifically, IP penetrates in the following sequence: the release of IP documents, the government support (GS) obtained by enterprises, and the generation of TI. Following Xu et al. (2023), we construct the following mediation effect model:

$$\mathrm{TI}^{\wedge}_{jt}=\varphi_{1}+\lambda_{1}\,\mathrm{TI}^{\wedge}_{j,t-1}+c\,\mathrm{IP}_{jt}+\gamma_{11}\,\mathrm{Control}_{jt}+\eta_{j}+\varepsilon_{jt} \tag{4}$$

$$\mathrm{GS}_{jt}=\varphi_{2}+\lambda_{2}\,\mathrm{GS}_{j,t-1}+a\,\mathrm{IP}_{jt}+\gamma_{21}\,\mathrm{Control}_{jt}+\eta_{j}+\varepsilon_{jt} \tag{5}$$

$$\mathrm{TI}^{\wedge}_{jt}=\varphi_{3}+\lambda_{3}\,\mathrm{TI}^{\wedge}_{j,t-1}+c'\,\mathrm{IP}_{jt}+b\,\mathrm{GS}_{jt}+\gamma_{31}\,\mathrm{Control}_{jt}+\eta_{j}+\varepsilon_{jt} \tag{6}$$

where \(\mathrm{TI}^{\wedge}_{jt}\) represents the number of invention patent applications of enterprise \(j\) in year \(t\); \(\mathrm{IP}_{jt}\) is the number of incentive policies in the cluster where enterprise \(j\) is located; and \(\mathrm{GS}_{jt}\) is measured by the government subsidies received by enterprise \(j\) (e.g., Chen and Wang, 2022b). \(\mathrm{Control}_{jt}\) denotes the control variables: enterprise profitability (EP), capital structure (CS), and operating capacity (OC). EP is measured by the return on total assets (e.g., Chen and Wang, 2022a), CS by the asset-liability ratio (e.g., Peng and Tao, 2022), and OC by the asset turnover ratio (e.g., Xu and Chen, 2020). All other symbols have the same meaning as in Eq. (3).

The mediation mechanism is plotted in Fig. 7. If the coefficients c, a, and b are all significant, GS has a mediating effect (Zhong and Zhang, 2024).
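The coefficient logic behind Eqs. (4)-(6) can be illustrated with plain OLS on synthetic data. This is a simplified sketch: it drops the lagged dependent variables and fixed effects, and it uses OLS instead of System-GMM. In that linear OLS setting, with the same controls in all three equations, the total effect decomposes exactly as c = c′ + a·b, where a·b is the indirect effect through GS. The dynamic GMM estimates in the paper need not satisfy this identity exactly. Function names and data are hypothetical.

```python
import numpy as np

def ols(y, *regressors):
    """OLS coefficients [intercept, b_1, b_2, ...] via least squares."""
    X = np.column_stack([np.ones(len(y)), *regressors])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

def mediation_paths(ip, gs, ti, control):
    """Estimate the three paths of a simple (static, OLS) mediation model."""
    c = ols(ti, ip, control)[1]          # analogue of Eq. (4): total effect of IP on TI
    a = ols(gs, ip, control)[1]          # analogue of Eq. (5): effect of IP on GS
    full = ols(ti, ip, gs, control)      # analogue of Eq. (6)
    c_prime, b = full[1], full[2]        # direct effect of IP and effect of GS on TI
    return {"c": c, "a": a, "b": b, "c_prime": c_prime, "indirect": a * b}

# Synthetic data in which IP raises GS and GS raises TI (all values hypothetical).
rng = np.random.default_rng(1)
n = 500
control = rng.normal(size=n)
ip = rng.poisson(2.0, size=n).astype(float)
gs = 0.8 * ip + 0.3 * control + rng.normal(size=n)
ti = 0.2 * ip + 0.5 * gs + 0.1 * control + rng.normal(size=n)

paths = mediation_paths(ip, gs, ti, control)
for name, value in paths.items():
    print(f"{name}: {value:.3f}")
```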

We obtain a list of AI enterprises from the China Stock Market and Accounting Research (CSMAR) database (Footnote 20). Based on geographical location, we select 125 firms located within the clusters as our sample (Footnote 21); their enterprise data also come from CSMAR. We estimate the mediating effect of GS with System-GMM. As Table 5 shows, all p-values for AR(1) are below 0.1, while those for AR(2) and the Sargan test exceed 0.1, so System-GMM is appropriate. The coefficients for IP → TI^, IP → GS, and GS → TI^ are all significantly positive at the 1% level. Hence, IP promotes TI^ by strengthening GS, which verifies the penetration effect of IP.

Changing the regression model

Poisson regression is commonly used when the dependent variable is count data. In this study, however, the dependent variable is overdispersed: its variance exceeds its mean (Footnote 22). This violates the Poisson assumption that the mean equals the variance, so we adopt negative binomial regression instead. The results in Table 6 show that the coefficient of IP is significantly positive and that the coefficient of the interaction between IP and NC is significantly negative. The empirical results of this research are therefore robust.
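A quick way to check for overdispersion is to compare the sample variance of the counts with their mean. Under a Poisson model the two are equal, so a ratio well above 1 points toward a negative binomial specification. In the common NB2 parameterisation the variance is μ + αμ², and a rough moment-based estimate of α is (variance − mean)/mean². The sketch and counts below are illustrative, not the study's data. In practice a formal test, such as a likelihood-ratio test of α = 0, would be preferable.

```python
from statistics import mean, variance

def dispersion_ratio(counts):
    """Sample variance divided by the mean; about 1 under a Poisson model."""
    return variance(counts) / mean(counts)

def nb2_alpha_moment(counts):
    """Rough method-of-moments estimate of alpha in Var = mu + alpha * mu**2."""
    m = mean(counts)
    return (variance(counts) - m) / m ** 2

# Hypothetical patent counts with a long right tail.
counts = [0, 1, 1, 2, 3, 5, 8, 20]
print("variance/mean:", round(dispersion_ratio(counts), 2))
print("moment estimate of alpha:", round(nb2_alpha_moment(counts), 2))
```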


Best Laptop for Artificial Intelligence

Artificial Intelligence is no longer the future; it’s the present. From deep learning and data science to neural networks and natural language processing, AI is everywhere. And if you’re diving into AI development, machine learning, or research, one thing is absolutely essential…

A high-performance laptop that can handle intensive tasks, large datasets, and GPU acceleration without breaking a sweat.

So, in today’s blog post, I’m breaking down the Top 5 Best Laptops for Artificial Intelligence, from budget options to professional-grade machines. Whether you’re a student, researcher, or engineer, there’s something here for you. Let’s get started!

When choosing a laptop for AI tasks, you need to focus on these key components:

Processor (CPU): AI workloads need serious computing power. A latest-generation Intel Core i7 or AMD Ryzen 9 CPU is the baseline.

Graphics (GPU): If you’re training models in TensorFlow or PyTorch with GPU acceleration, a dedicated NVIDIA GPU (RTX 2070 or above) is a must.

RAM & Storage: Aim for at least 16GB of RAM and 512GB–1TB SSD storage for smooth data processing, caching, and managing large datasets.

Battery Life: You’ll also want a solid battery (at least 7–8 hours), a good display, and a build that can handle constant work or travel.
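If you’re wondering whether 16GB is enough, a back-of-the-envelope check helps. A dense numeric dataset takes roughly rows × features × bytes-per-value of memory, which is 4 bytes per value for float32. The helper below is a simple illustration, not a benchmark. Real usage is higher because of copies, preprocessing, and framework overhead, and the 2× headroom factor is just an assumption.

```python
def dataset_memory_gib(n_rows, n_features, bytes_per_value=4):
    """Approximate size of a dense numeric array in GiB (float32 = 4 bytes per value)."""
    return n_rows * n_features * bytes_per_value / 1024 ** 3

def fits_in_ram(n_rows, n_features, ram_gib, headroom=2.0, bytes_per_value=4):
    """Rough check: require `headroom` times the raw size to leave room for copies and overhead."""
    return dataset_memory_gib(n_rows, n_features, bytes_per_value) * headroom <= ram_gib

# Example: 10 million rows x 100 float32 features on a 16GB laptop.
size = dataset_memory_gib(10_000_000, 100)
print(f"raw size: {size:.2f} GiB, fits in 16GB with headroom: {fits_in_ram(10_000_000, 100, 16)}")
```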

If you’re looking for raw AI performance without paying a premium price, the ASUS Zephyrus G14 is an unbeatable value.

This machine features a powerful AMD Ryzen 9 processor paired with a dedicated NVIDIA GPU and 16GB of RAM, making it perfect for machine learning, AI model training, and multitasking across data-intensive applications.

The 1TB SSD ensures you have more than enough space for large datasets, code libraries, and even virtual environments.

What makes this laptop shine is its balanced build: it’s lightweight and portable, yet doesn’t compromise on performance.

Whether you’re working in TensorFlow, PyTorch, or another deep learning framework, the Zephyrus G14 delivers stable performance with great thermals.

Its design is sleek and minimal, appealing to students and professionals alike. You get workstation-level specs packed into a 14-inch chassis: a powerful combo for AI on the move.

The MSI P65 Creator is purpose-built for creative professionals, but its specs make it an absolute dream for AI developers and researchers.

Powered by a high-end Intel Core i7 processor and a generous 32GB of RAM, this laptop breezes through AI workloads. Its 1TB SSD ensures lightning-fast file access and plenty of storage for large datasets and project files.

Plus, it comes with a dedicated GPU that accelerates deep learning tasks significantly.

One of its standout features is the color-accurate, anti-glare display, ideal for visualizing data, building AI interfaces, or multitasking across several windows.

This laptop is also well-known for its quiet cooling system, so you can train models overnight or work in silence.

Built with a slim aluminum chassis, it’s surprisingly portable for a workstation-class device.

If you want seamless, stutter-free performance across your AI workflows, the P65 Creator is a smart and reliable choice.

The Razer Blade 15 is an excellent all-rounder capable of handling AI workloads, everyday computing, and even some light gaming during downtime.

Under the hood, you’ll find a 10th Gen Intel Core i7 processor and a GTX 1650 Ti GPU. It’s not the highest-end graphics card, but it’s still solid enough for training mid-size models with GPU-accelerated frameworks like Keras, and for everyday work in Scikit-learn or JupyterLab.

While it only includes 8GB of RAM and 256GB of storage out of the box, both are upgradeable, which means you can scale up your system as your needs grow.

The build quality is premium, with a durable aluminum chassis and a beautiful 15.6-inch display.

Whether you’re studying AI, doing part-time freelance work, or transitioning into full-time development, the Blade 15 provides the right blend of portability, upgradability, and raw computing muscle to support your journey.

If you’re stepping into the AI space and want something capable yet budget-friendly, the ASUS VivoBook K571 hits a sweet spot.

It’s powered by an Intel Core i7 processor and NVIDIA GTX 1650 graphics, which gives you enough power to train smaller models, run simulations, or experiment with various machine learning frameworks.

With 1TB of storage, you also won’t run out of space when storing datasets, scripts, or libraries.

Although it comes with only 8GB of RAM, the system performs well for beginner to intermediate-level AI workloads and multitasking.

The display is sharp and bright, and the keyboard is comfortable for long coding sessions.

While it may not handle extremely heavy GPU training jobs, it’s more than capable for learners, students, or entry-level professionals exploring neural networks, AI ethics, or NLP models.

For its price, this VivoBook delivers far more than expected, making it ideal for those starting out.

The Microsoft Surface Book 2 blends power, portability, and premium build quality, making it a top-tier option for AI developers who value versatility.

It’s equipped with a capable Core i7 processor and a dedicated NVIDIA GTX 1050 GPU, which allows it to perform well in AI development environments like TensorFlow and Azure ML Studio.

The 13.5-inch display is crisp and touch-enabled, perfect for interactive visualizations, quick sketches, or note-taking with a stylus.

While the 8GB of RAM and 1.9GHz base speed might seem modest, its seamless integration with Microsoft’s ecosystem and incredible portability make it a productivity beast.

It also doubles as a tablet, useful for presentations, whiteboarding, or reading research papers.

Surface Book 2 is best suited for professionals and educators who need a dependable, multifunctional machine for AI development, teaching, and mobile workspaces without compromising on quality or battery life.

Conclusion

There you have it: the top 5 best laptops for AI and machine learning. Whether you’re working with TensorFlow, Keras, Scikit-learn, or simply experimenting with AI algorithms, these machines will support your journey.

If you’re just starting out, the ASUS VivoBook is a great budget option. Need professional smoothness? Go for the MSI P65. But for the best all-round performance and value, I highly recommend the Zephyrus G14.

Got any questions, or still can’t decide which one’s right for you? Drop your thoughts in the comments; I respond to every single one!


Giving voice to machines: The NLP breakthrough in artificial intelligence

What is Natural Language Processing?

Natural Language Processing (NLP) is a branch of artificial intelligence (AI) that enables machines to understand, interpret, generate, and respond to human language in a way that is both meaningful and context-aware.

It combines computational linguistics (rule-based modeling of human language) with machine learning, deep learning, and statistics to enable intelligent language-based applications.

How NLP revolutionised AI tools

| Before NLP | After NLP |
| --- | --- |
| AI systems were logic-driven, unable to process unstructured data like human speech or text. | NLP allowed machines to understand, generate, and translate human languages, making human-computer interaction seamless. |

Major contributions

- Conversational AI (e.g. Chatbots, Virtual Assistants like ChatGPT, Alexa)

- Search Engines (Google uses NLP for semantic search)

- Language Translation (Google Translate, DeepL)

- Sentiment Analysis (used in governance, business, and elections)

- Speech Recognition (used in digital governance, smart devices)

- Accessibility Tools (voice-to-text, language simplification)

Key functions of NLP

| Function | Explanation |
| --- | --- |
| Text classification | Categorising text (e.g., spam detection, topic tagging) |
| Named entity recognition (NER) | Identifying people, places, and organisations in text |
| Sentiment analysis | Detecting emotional tone in speech or text |
| Machine translation | Translating between languages |
| Speech recognition | Converting spoken language to text |
| Text summarisation | Condensing long articles or documents into key points |
| Question answering | Forming answers based on context and queries |
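To make the first row of the table concrete, here is a tiny text classifier for spam detection. It is a multinomial naive Bayes model with add-one smoothing, written from scratch in Python. It is a teaching sketch trained on four toy sentences, not how production NLP systems are built; real tools are typically trained on far larger data and often use neural models.

```python
import math
from collections import Counter

def train_naive_bayes(docs, labels):
    """Collect vocabulary, per-class word counts, and class frequencies."""
    vocab = set()
    word_counts = {}
    class_counts = Counter(labels)
    for doc, label in zip(docs, labels):
        words = doc.lower().split()
        vocab.update(words)
        word_counts.setdefault(label, Counter()).update(words)
    return vocab, word_counts, class_counts

def classify(text, model):
    """Return the label with the highest log-posterior (add-one smoothing)."""
    vocab, word_counts, class_counts = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_counts.items():
        counts = word_counts[label]
        denominator = sum(counts.values()) + len(vocab)
        score = math.log(n_docs / total_docs)          # log prior
        for word in text.lower().split():
            if word in vocab:                           # ignore unseen words
                score += math.log((counts[word] + 1) / denominator)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

docs = [
    "win a free prize now",
    "free money claim now",
    "meeting agenda for monday",
    "please review the project report",
]
labels = ["spam", "spam", "ham", "ham"]
model = train_naive_bayes(docs, labels)

print(classify("claim your free prize", model))
print(classify("project meeting on monday", model))
```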

Applications in governance & civil services

| Field | Application |
| --- | --- |
| E-Governance | Voice-based citizen query resolution in vernacular languages |
| Policy monitoring | Sentiment analysis of public opinion on policies |
| Grievance redressal | Automating categorisation and escalation of complaints |
| Language translation | Real-time translation for multilingual communication |
| Education | AI tutors, exam evaluation using NLP tools |
| Judiciary | Legal document summarisation, precedent retrieval |
| Parliament | Automated transcription and translation of speeches |

Limitations of NLP

| Limitation | Explanation |
| --- | --- |
| Ambiguity | Words have multiple meanings (e.g., ‘bank’ – river or financial) |
| Context sensitivity | Difficulty in understanding sarcasm, idioms, or cultural nuances |
| Bias | NLP models may reflect biases present in training data |
| Data dependency | Requires vast, high-quality data sets for training |
| Multilingual complexity | Accurate understanding across India’s 22 scheduled languages is still a challenge |
| Security risks | Can be misused to generate fake news or other deceptive text at scale |
| Legal and ethical issues | Privacy, consent, and misinformation concerns |
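The ‘bank’ example in the first row is a word-sense disambiguation problem. One classic approach is the simplified Lesk algorithm, which chooses the sense whose dictionary definition shares the most words with the surrounding sentence. The sketch below uses two hand-written glosses and a tiny stop-word list, both hypothetical. Real systems draw on lexical resources or contextual language models.

```python
STOPWORDS = {"a", "an", "and", "as", "i", "in", "of", "on", "that", "the", "to", "we"}

# Hypothetical, hand-written glosses for two senses of "bank".
BANK_SENSES = {
    "river": "sloping land beside a body of water such as a river",
    "financial": "an institution that accepts deposits and lends money",
}

def content_words(text):
    """Lower-case, split on whitespace, and drop stop words."""
    return {w for w in text.lower().split() if w not in STOPWORDS}

def simplified_lesk(context, senses):
    """Pick the sense whose gloss shares the most content words with the context."""
    context_words = content_words(context)
    best_sense, best_overlap = None, -1
    for sense, gloss in senses.items():
        overlap = len(context_words & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense

print(simplified_lesk("we had a picnic on the bank of the river near the water", BANK_SENSES))
print(simplified_lesk("i went to the bank to deposit money", BANK_SENSES))
```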

Civil services perspective – Analytical box

| Theme | NLP’s role |
| --- | --- |
| Digital India | Empowers inclusive digital interfaces for rural users |
| Linguistic Diversity | Bridges communication gaps through AI translation |
| Ease of Governance | Automates public services and reduces bureaucratic load |
| Policy Making | Extracts insights from citizen feedback, social media |
| Disaster Management | Processes emergency calls/messages in multiple languages |
| Security & Surveillance | Monitors harmful speech/text (e.g., extremism online) |

Conclusion

NLP stands at the heart of the AI revolution, driving a new era of intelligent, human-centric technology. For civil servants and policymakers, its strategic use can enhance transparency, efficiency and inclusivity in governance.

However, ethical deployment, regulation, and investment in Indian language NLP research are essential to ensure equitable benefits across all sections of society.


3 No-Brainer Artificial Intelligence (AI) Stocks to Buy Now and Hold Forever

Long-term competitive advantages ensure these companies aren’t some flash-in-the-pan AI stocks.

Excitement around artificial intelligence (AI) and its potential impact on businesses has led to soaring stock prices for many of the biggest tech companies. Nvidia, for example, has seen its stock price grow more than tenfold since the release of ChatGPT in late 2022, now topping $4 trillion in market cap.

Some investors may feel like they’ve missed the boat and they’re too late to buy AI stocks at a good price. It’s important to consider that today’s AI winners might not be the biggest companies to benefit from advancements in artificial intelligence over the long run. Finding a company that’s making excellent progress right now with sustainable long-term competitive advantages could end up being an even better stock to own when the dust settles.

These three companies are all well positioned to benefit from the continued growth and advancement in artificial intelligence. Their stocks are all attractive at today’s prices, too, which means you can buy them now and hold them forever.


1. Amazon

Amazon (AMZN) is home to the largest public cloud computing platform in the world, Amazon Web Services, or AWS. The segment generated $116.4 billion in revenue over the last 12 months, roughly 50% more than its next-closest competitor, Microsoft’s Azure.

Some have expressed concern about AWS for a few reasons. First, it was caught flat-footed as the generative AI opportunity was getting off the ground. That led it to cede market share to Microsoft and others who were earlier to invest in the space. However, it quickly course-corrected, releasing its Bedrock platform, and it’s seeing triple-digit growth in AI services. As such, it’s been able to maintain most of its market share in a rapidly growing market (even though overall revenue growth has slowed to the high-teens).

The second reason is that AWS saw a significant decline in operating margin in the second quarter. Management explained half of that decline was due to the timing of stock-based compensation. The rest is explained by Amazon’s significant investments in capacity, as it notes the business remains capacity constrained. Over time, investors should see margin tick back up. It’s worth noting AWS still commands higher margins than its smaller competitors.

Meanwhile, the rest of Amazon looks strong. Its retail operations are seeing improved margins quarter after quarter, thanks in part to a strong advertising business. The international segment is notably on its way to becoming a meaningful contributor to operating income after years of investment.

The stock fell following the release of its second-quarter earnings based on a disappointing outlook. But the long-term potential for Amazon, particularly in AWS, remains strong. The pullback in price looks like an opportunity for long-term investors to buy this AI leader.

2. Salesforce

Salesforce (CRM) provides a suite of software often found at the center of many enterprises’ operations. The company has seen very good results with its growing set of cloud-based software solutions, but the standout recently has been its Data Cloud offering. Data Cloud provides a single platform to aggregate all of a company’s data to create actionable insights from a single source.

Data Cloud recurring revenue grew to $1 billion in Salesforce’s most recent quarter, up 120% year over year. It’s seeing strong attachment, with 60 of its top 100 deals including Data Cloud in the contract. And the most recent product built on top of Data Cloud, Agentforce, is seeing very strong adoption.

Agentforce allows businesses to build AI agents that can execute tasks or provide customer service with minimal human intervention. The key to building successful AI agents is access to pertinent data, which is exactly what Data Cloud brings to the table. Management says it’s made 8,000 deals with Agentforce since its launch last fall, representing $100 million in revenue. That makes it Salesforce’s fastest-growing product ever.

Considering Salesforce’s software suite is entrenched in the operations of so many enterprises, it’s in a prime position to benefit from growing spend on artificial intelligence, particularly through Data Cloud. It’s unlikely to lose that position. In fact, its expanding suite of software tools only serves to increase the switching costs for a company. With shares trading for just 22 times forward earnings estimates, Salesforce looks like a great buy at today’s price.

3. Meta Platforms

Meta Platforms (META) may be the biggest investor in artificial intelligence in the world. It’s on track to spend between $66 billion and $72 billion on capital expenditures, and it’s only building compute power for itself (unlike the other hyperscalers, who serve cloud customers). There’s a good reason Meta is spending more than everyone else on artificial intelligence; it could be the biggest beneficiary of all generative AI has to offer.

Signs of that are already coming through. In the second quarter, Meta’s ad prices climbed 9% year over year and impressions grew 11%. CEO Mark Zuckerberg notes a significant portion of that improvement came from its AI-recommendation model. Additionally, time spent on Facebook and Instagram increased 5% and 6%, respectively, thanks to bigger AI models.

But the future is bright, too. Meta’s generative AI tools for ad creative are seeing strong adoption. Zuckerberg notes “a meaningful… [percentage] of our ad revenue now… [comes] from campaigns using one of our Generative AI features.” Long term, Meta is working on an AI agent that can develop and test ad creatives autonomously.

Meta’s AI chatbot now boasts over 1 billion users, creating an additional channel for monetization over the long run. Meta only recently started putting ads in WhatsApp and Threads, which should provide additional ad revenue as advertising on Meta grows increasingly easier thanks to generative AI capabilities.

Meta’s seeing excellent financial results from the growing adoption of its advertising platform and increased engagement from its users. Revenue climbed 22% last quarter and operating income grew an impressive 38%. Meta’s growing depreciation expense will likely weigh on earnings going forward as long as it continues to ramp up spending, but if it continues to produce top-line growth like last quarter, that’s easily digestible.

If you back out the depreciation expense using EBITDA, Meta shares trade for an attractive price with enterprise value around 16 times forward EBITDA estimates. Even on a more traditional forward P/E valuation, Meta shares look to be well worth the 27 times multiple you’ll have to pay for the stock today.
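The two valuation yardsticks used above are simple ratios. Forward P/E divides the share price by expected earnings per share. EV/EBITDA divides enterprise value (market capitalization plus debt minus cash) by earnings before interest, taxes, depreciation, and amortization. Because EBITDA adds depreciation back, heavy data-center spending that inflates depreciation lowers earnings, and thus raises P/E, without touching EBITDA. The numbers below are round hypothetical figures, not Meta’s actual financials, and the tax rate and share count are simplifying assumptions.

```python
def forward_pe(share_price, forward_eps):
    """Forward P/E: current share price divided by expected earnings per share."""
    return share_price / forward_eps

def ev_to_ebitda(market_cap, total_debt, cash, ebitda):
    """EV/EBITDA, with enterprise value = market cap + debt - cash."""
    enterprise_value = market_cap + total_debt - cash
    return enterprise_value / ebitda

# Hypothetical company: higher depreciation cuts net income (and EPS) but not EBITDA.
ebitda = 60.0
for depreciation in (10.0, 25.0):
    net_income = (ebitda - depreciation) * 0.8   # assumes 20% tax and no interest
    eps = net_income / 10.0                      # assumes 10 shares outstanding
    print(f"depreciation {depreciation}: P/E {forward_pe(100.0, eps):.1f}, "
          f"EV/EBITDA {ev_to_ebitda(1000.0, 100.0, 200.0, ebitda):.1f}")
```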

Adam Levy has positions in Amazon, Meta Platforms, Microsoft, and Salesforce. The Motley Fool has positions in and recommends Amazon, Meta Platforms, Microsoft, Nvidia, and Salesforce. The Motley Fool recommends the following options: long January 2026 $395 calls on Microsoft and short January 2026 $405 calls on Microsoft. The Motley Fool has a disclosure policy.
