Researchers at the University of Illinois Urbana-Champaign and the University of Virginia have developed a new model architecture that could lead to more robust AI systems with more powerful reasoning capabilities.

Called an energy-based transformer (EBT), the architecture shows a natural ability to use inference-time scaling to solve complex problems. For the enterprise, this could translate into cost-effective AI applications that can generalize to novel situations without the need for specialized fine-tuned models.

In psychology, human thought is often divided into two modes: System 1, which is fast and intuitive, and System 2, which is slow, deliberate and analytical. Current large language models (LLMs) excel at System 1-style tasks, but the AI industry is increasingly focused on enabling System 2 thinking to tackle more complex reasoning challenges.

Reasoning models use various inference-time scaling techniques to improve their performance on difficult problems. One popular method is reinforcement learning (RL), used in models like DeepSeek-R1 and OpenAI’s “o-series” models, where the AI is rewarded for producing reasoning tokens until it reaches the correct answer. Another approach, often called best-of-n, involves generating multiple potential answers and using a verification mechanism to select the best one.
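The best-of-n idea can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: `best_of_n`, `toy_generate` and `toy_score` are hypothetical names, and the toy generator and verifier simply stand in for a language model and a scoring model.

```python
import random
from typing import Callable


def best_of_n(generate: Callable[[], str], score: Callable[[str], float], n: int) -> str:
    """Sample n candidate answers and return the one the verifier scores highest."""
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)


# Hypothetical stand-ins: a noisy "generator" guessing the answer to 17 + 25,
# and a "verifier" that scores a guess by its closeness to the true sum.
_rng = random.Random(0)


def toy_generate() -> str:
    return str(_rng.randint(35, 49))


def toy_score(answer: str) -> float:
    return -abs(int(answer) - 42)


if __name__ == "__main__":
    print(best_of_n(toy_generate, toy_score, n=16))
```

The quality of the result depends entirely on the verifier: if `score` cannot tell good answers from bad ones, drawing more samples does not help.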

However, these methods have significant drawbacks. They are often limited to a narrow range of easily verifiable problems, like math and coding, and can degrade performance on other tasks such as creative writing. Furthermore, recent evidence suggests that RL-based approaches might not be teaching models new reasoning skills, instead just making them more likely to use successful reasoning patterns they already know. This limits their ability to solve problems that require true exploration and are beyond their training regime.

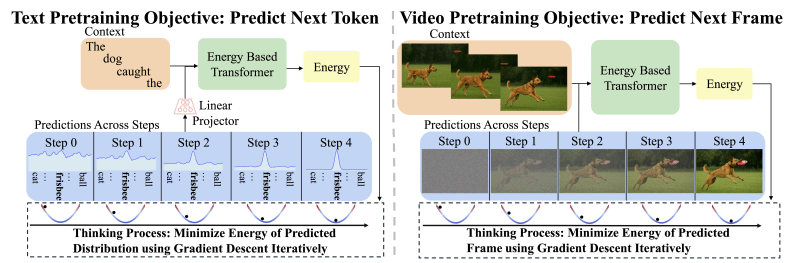

The new architecture takes a different approach, based on a class of models known as energy-based models (EBMs). The core idea is simple: Instead of directly generating an answer, the model learns an “energy function” that acts as a verifier. This function takes an input (like a prompt) and a candidate prediction and assigns a value, or “energy,” to the pair. A low energy score indicates high compatibility, meaning the prediction is a good fit for the input, while a high energy score signifies a poor match.

Applying this to AI reasoning, the researchers propose in a paper that developers should view “thinking as an optimization procedure with respect to a learned verifier, which evaluates the compatibility (unnormalized probability) between an input and candidate prediction.” The process begins with a random prediction, which is then progressively refined by minimizing its energy score and exploring the space of possible solutions until it converges on a highly compatible answer. This approach is built on the principle that verifying a solution is often much easier than generating one from scratch.
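A toy version of this refinement loop might look like the sketch below. Everything in it is an assumption made for illustration: the hand-written quadratic `energy` stands in for a learned verifier, and plain gradient descent stands in for whatever optimizer the researchers actually use, which the article does not specify.

```python
import random


def energy(x: float, y: float) -> float:
    """Hypothetical verifier: low when prediction y is compatible with input x."""
    return (y - (2.0 * x + 1.0)) ** 2


def energy_grad(x: float, y: float) -> float:
    """Derivative of the energy with respect to the prediction y."""
    return 2.0 * (y - (2.0 * x + 1.0))


def think(x: float, steps: int = 50, step_size: float = 0.1, seed: int = 0) -> float:
    """Start from a random prediction and refine it by descending the energy."""
    y = random.Random(seed).uniform(-10.0, 10.0)
    for _ in range(steps):
        y -= step_size * energy_grad(x, y)
    return y


if __name__ == "__main__":
    x = 1.0
    y = think(x)
    print(f"prediction={y:.4f}, energy={energy(x, y):.6f}")
```

In this toy setting the most compatible prediction for `x = 1.0` is `3.0`, and the loop moves the random starting guess toward it one step at a time.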

This “verifier-centric” design addresses three key challenges in AI reasoning. First, it allows for dynamic compute allocation, meaning models can “think” for longer on harder problems and for less time on easier ones. Second, EBMs can naturally handle the uncertainty of real-world problems where there isn’t one clear answer. Third, they act as their own verifiers, eliminating the need for external models.

Unlike other systems that use separate generators and verifiers, EBMs combine both into a single, unified model. A key advantage of this arrangement is better generalization. Because verifying a solution on new, out-of-distribution (OOD) data is often easier than generating a correct answer, EBMs can better handle unfamiliar scenarios.

Despite their promise, EBMs have historically struggled with scalability. To solve this, the researchers introduce EBTs, which are specialized transformer models designed for this paradigm. EBTs are trained to first verify the compatibility between a context and a prediction, then refine predictions until they find the lowest-energy (most compatible) output. This process effectively simulates a thinking process for every prediction. The researchers developed two EBT variants: A decoder-only model inspired by the GPT architecture, and a bidirectional model similar to BERT.

The architecture of EBTs makes them flexible and compatible with various inference-time scaling techniques. “EBTs can generate longer CoTs, self-verify, do best-of-N [or] you can sample from many EBTs,” Alexi Gladstone, a PhD student in computer science at the University of Illinois Urbana-Champaign and lead author of the paper, told VentureBeat. “The best part is, all of these capabilities are learned during pretraining.”

The researchers compared EBTs against established architectures: the popular Transformer++ recipe for text generation (discrete modalities) and the diffusion transformer (DiT) for tasks like video prediction and image denoising (continuous modalities). They evaluated the models on two main criteria: “learning scalability,” or how efficiently they train, and “thinking scalability,” which measures how performance improves with more computation at inference time.

During pretraining, EBTs demonstrated superior efficiency, achieving up to a 35% higher scaling rate than Transformer++ across data, batch size, parameters and compute. This means EBTs can be trained faster and more cheaply.

At inference, EBTs also outperformed existing models on reasoning tasks. By “thinking longer” (using more optimization steps) and performing “self-verification” (generating multiple candidates and choosing the one with the lowest energy), EBTs improved language modeling performance by 29% more than Transformer++. “This aligns with our claims that because traditional feed-forward transformers cannot dynamically allocate additional computation for each prediction being made, they are unable to improve performance for each token by thinking for longer,” the researchers write.
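The two inference-time levers described here, more optimization steps and picking the lowest-energy candidate, can be illustrated with a toy energy landscape. This is a sketch under stated assumptions: the two-valley `energy` function, the gradient-descent `refine` step and the fixed starting points are all hypothetical, chosen only to show the mechanics, and are not taken from the paper.

```python
from typing import Sequence


def energy(y: float) -> float:
    """Hypothetical energy landscape with two valleys; the left one is deeper."""
    return (y * y - 1.0) ** 2 + 0.3 * y


def energy_grad(y: float) -> float:
    """Derivative of the toy energy with respect to the candidate y."""
    return 4.0 * y * (y * y - 1.0) + 0.3


def refine(y: float, steps: int, step_size: float = 0.02) -> float:
    """'Thinking longer': take more gradient steps on the same candidate."""
    for _ in range(steps):
        y -= step_size * energy_grad(y)
    return y


def self_verify(starts: Sequence[float], steps: int) -> float:
    """Refine several candidates and keep the one with the lowest energy."""
    refined = [refine(y0, steps) for y0 in starts]
    return min(refined, key=energy)


if __name__ == "__main__":
    short = refine(1.5, steps=5)
    longer = refine(1.5, steps=300)
    best = self_verify([1.5, 0.5, -0.5], steps=300)
    print(f"5 steps: energy={energy(short):.3f}")
    print(f"300 steps: energy={energy(longer):.3f}")
    print(f"best of 3 candidates: y={best:.3f}, energy={energy(best):.3f}")
```

A single candidate that starts at `1.5` settles in the shallower valley no matter how long it is refined; trying several starting points and comparing their energies is what lets the procedure find the deeper one.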

For image denoising, EBTs achieved better results than DiTs while using 99% fewer forward passes.

Crucially, the study found that EBTs generalize better than the other architectures. Even with the same or worse pretraining performance, EBTs outperformed existing models on downstream tasks. The performance gains from System 2 thinking were most substantial on data that was further out-of-distribution (different from the training data), suggesting that EBTs are particularly robust when faced with novel and challenging tasks.

The researchers suggest that “the benefits of EBTs’ thinking are not uniform across all data but scale positively with the magnitude of distributional shifts, highlighting thinking as a critical mechanism for robust generalization beyond training distributions.”

The benefits of EBTs are important for two reasons. First, they suggest that at the massive scale of today’s foundation models, EBTs could significantly outperform the classic transformer architecture used in LLMs. The authors note that “at the scale of modern foundation models trained on 1,000X more data with models 1,000X larger, we expect the pretraining performance of EBTs to be significantly better than that of the Transformer++ recipe.”

Second, EBTs show much better data efficiency. This is a critical advantage in an era where high-quality training data is becoming a major bottleneck for scaling AI. “As data has become one of the major limiting factors in further scaling, this makes EBTs especially appealing,” the paper concludes.

Despite its different inference mechanism, the EBT architecture is highly compatible with the transformer, making it possible to use EBTs as drop-in replacements for current LLMs.

“EBTs are very compatible with current hardware/inference frameworks,” Gladstone said, including speculative decoding using feed-forward models on both GPUs and TPUs. He said he is also confident they can run on specialized accelerators such as LPUs, work with optimization algorithms such as FlashAttention-3, and be deployed through common inference frameworks like vLLM.

For developers and enterprises, the strong reasoning and generalization capabilities of EBTs could make them a powerful and reliable foundation for building the next generation of AI applications. “Thinking longer can broadly help on almost all enterprise applications, but I think the most exciting will be those requiring more important decisions, safety or applications with limited data,” Gladstone said.

Monday, July 21, 2025

Shocking footage posted to the Instagram account TouronsOfYellowstone (@touronsofyellowstone) brought attention to the moment four bikers cut through the Grand Prismatic at Yellowstone National Park.

“The 4 of them were biking from the tree line towards the boardwalk when, as they were nearing the actual hot springs, several people yelled at them to turn around,” the caption reads.

The Grand Prismatic is the largest hot spring in the United States, in a park known for its geysers. Its microbial mats have played a major role in scientific research and help preserve the unique geothermal ecosystem. Biking over the spring is not only dangerous for the bikers; it also does considerable damage to the landscape.

“I have never seen anything like this in Yellowstone ever after many decades,” TouronsOfYellowstone wrote. “This is next level! I just don’t understand the thought process these people had to think that it was okay for them to not just walk but to ride their bikes on the Grand Prismatic.”

Trespassing in ecosystems meant to be preserved can lead to a slew of legal issues. Some people who have done so have ended up with $5,000 in fines. Safety is also at stake. Hot springs such as the Grand Prismatic have been known to seriously injure or kill people who have broken safety protocols.

Brandon Gauthier, Yellowstone’s chief safety officer, explained that the park tries “to educate people starting when they come through the gate.” Gauthier further stated that there is “a fine line between giving visitors a chance to get close to popular attractions and ruining the natural landscapes that national parks were created to preserve.”

Due to past deaths, the park continues to emphasize how dangerous such actions can be.

Trespassing can also harm biodiversity. Tourist interference has caused water pollution, introduced invasive species, and damaged microbial mats, according to some reports. Biking over the ecosystem is undoubtedly another massive blow to the preservation of the park’s geothermal features.

Disregarding safety signs and regulations can also make wildlife interactions more likely, endangering both humans and animals. Animals that injure humans, whether they’re provoked or not, may be euthanized.

Commenters were appalled by the tourists’ behavior.

“They should all be arrested immediately, fined and banned from all national parks forever,” voiced one angry user.

Another user commented, “I’ve said it before, the possibility of being boiled alive and turned into goo does not scare people enough.”

To avoid legal, safety, and biodiversity issues, the solution is clear: Follow the rules when enjoying national parks.

A short list of staples selected by Ottawa now offers free admission for children aged 17 and under and 50 per cent off for those aged 18 to 24

Eight New Brunswick tourist attractions are joining the federal government’s offer of free or discounted admission this summer.

The Beaverbrook Art Gallery, Kings Landing Historical Settlement, Ministers Island, Cape Enrage, and Fort La Tour in Saint John are among a short list of cultural and historical staples in the province selected by Ottawa to offer free admission for children aged 17 and under and 50 per cent off admission fees for youth aged 18 to 24.

The feds say they are paying to offset the admission costs.

Mark Carney ran on a federal election campaign promise to offer free or discounted admission to some of the country’s most iconic places through what he called a “Canada Strong Pass,” in hopes of seeing more Canadians vacationing at home this summer.

The initial announcement included free admission for all visitors to national historic sites, national parks, and national marine conservation areas administered by Parks Canada and a 25 per cent discount on camping fees until Sept. 2.

VIA Rail travel is free for children aged 17 and under when accompanied by an adult, with a 25 per cent discount for young adults aged 18 to 24.

National museums also have free admission for children aged 17 and under and a 50 per cent discount for those aged 18 to 24.

The feds then suggested other attractions may join in.

Ottawa has now announced that 86 provincial and territorial museums and galleries will be participating.

In New Brunswick, there are eight.

“Provincial and territorial museums and galleries were invited to join the Canada Strong Pass initiative, with government of Canada support helping offset admission costs,” the federal government said in a statement. “These museums are in addition to the national museums in Canada that are offering the same benefits.

“Now is the perfect time for Canadians to explore their rich cultural history and traditions by visiting and learning at any of these wonderful museums.”

In a statement, Beaverbrook Art Gallery spokesperson Curtis Richardson said Ottawa invited it to submit an application to the Canada Strong Pass Initiative under the Museums Assistance Program through Canadian Heritage.

“The funding they offer supports the costs associated with implementing the Canada Strong Pass, this includes help compensating for lost revenues,” Richardson said, believing the dollars will be enough to cover any loss.

He added that the gallery is “confident with our admission projections that we won’t incur any losses by being a part of this initiative.”

The feds confirmed that each eligible organization had to complete an application with information including their total admission revenues for the last completed fiscal year.

Funding of up to 15 per cent of total admission revenues for the last year is then provided, up to a maximum of $1 million.

The discounts are available until Sept. 2.

While the program is called the Canada Strong Pass, no registration or physical pass is actually necessary – just show up and enjoy the benefits offered at participating establishments, according to the feds.

The program is open to all visitors, whether you are Canadian or coming from abroad.

The discounts are also on top of one recently announced by the Holt government, which has discounted all provincial park passes for New Brunswickers by 25 per cent.

“We are doing this to help make vacationing in New Brunswick and staying in New Brunswick and getting outside in New Brunswick with your families more affordable,” Holt said at an announcement held at Fundy Trail Provincial Park in May.

The new Fundy Trail Provincial Park is one of the seven parks with discounted admission fees this season.

The six others are New River Beach, Murray Beach, Parlee Beach, Mount Carleton, Le Village Historique Acadien and Hopewell Rocks provincial parks.

Six other provincial parks don’t charge entrance fees.

More than 1.1 million visitors took in New Brunswick’s provincial parks in the 2024-25 fiscal year. Last year, New Brunswickers accounted for 235,000 paid daily visits.
